
AJA NTV2 SDK

17.6.0.2675

NTV2 SDK 17.6.0.2675


This page describes several techniques for capturing and playing real-time video.

Coming soon.

Playout Demos:

Coming soon.

Coming soon.

Capture Demos:

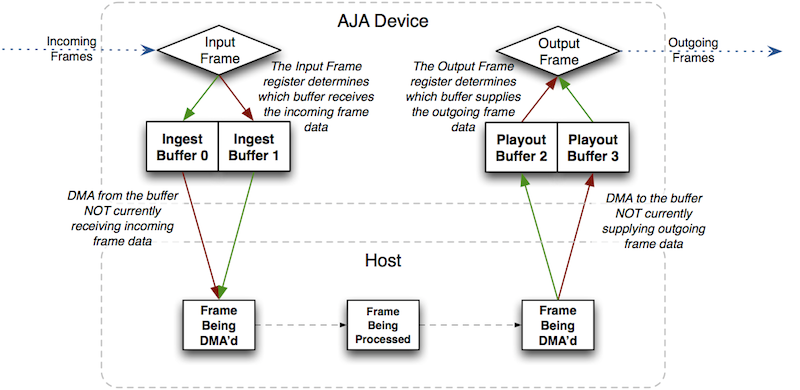

The “ping-pong” method is a good choice when lower latency is required. The illustration shows a scenario that captures incoming video frames, alters each captured frame in some way (e.g., “burns” an image onto it), and then plays the altered frames. One thread would, at every input VBI, flip the Input Frame between 0 and 1, and transfer the just-captured frame to the host. The other thread would, at every output VBI, flip the Output Frame between 2 and 3, and transfer a finished frame from the host to the off-screen buffer on the device. This should result in a three-frame latency.
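The per-VBI frame bookkeeping each thread performs can be sketched as a tiny state machine. PingPongPair below is a hypothetical helper, not an NTV2 class, and the device calls that actually select the input/output frame and perform the DMA are deliberately left out.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper (not part of the NTV2 SDK): tracks which of two device
// frames the hardware is using, so the host always DMAs the other one.
struct PingPongPair
{
    uint32_t first;   // e.g. frame 0 (input) or frame 2 (output)
    uint32_t second;  // e.g. frame 1 (input) or frame 3 (output)
    uint32_t active;  // frame currently being recorded (input) or played (output)

    PingPongPair(uint32_t a, uint32_t b) : first(a), second(b), active(a) {}

    // Call once per VBI. Points the hardware at the other frame and returns the
    // frame it just released: for input, the just-captured frame to DMA to the
    // host; for output, the now off-screen frame the host may overwrite.
    uint32_t Flip()
    {
        const uint32_t released = active;
        active = (active == first) ? second : first;
        return released;
    }
};
```

An input thread would own a PingPongPair(0, 1) and an output thread a PingPongPair(2, 3), each calling Flip once per VBI of its own direction.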

Because this technique gets “closer to the metal,” the application designer must manage every detail: making DMA transfers at the right time, keeping the audio synchronized with the video, getting/setting timecode, etc.

Any unexpected delays or slow-downs in frame processing or DMA transfers will cause visual distortion.

The low-latency model can support either field or frame mode processing. In this model, a time-critical user-mode thread is used to manage capture and/or playback in real-time.

For capture, full frames or separate fields can be transferred immediately after the VBI is received. Audio can be transferred based on the actual number of audio samples that have been written into the device audio buffer.
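Transferring audio based on the number of samples actually written is ring-buffer arithmetic on the device's circular audio buffer. A minimal sketch, assuming the application already knows the buffer size and the last-read and current-write byte offsets (how they are read from the device is not shown); the function names are hypothetical, and the 16-channel, 4-byte-sample figures in the comment are only an example.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: number of audio bytes written into a circular device
// audio buffer since the host last read from it. Offsets are byte positions in
// [0, bufferSize); equal offsets are treated as an empty buffer.
uint32_t AudioBytesAvailable(uint32_t lastReadOffset, uint32_t writeOffset, uint32_t bufferSize)
{
    if (writeOffset >= lastReadOffset)
        return writeOffset - lastReadOffset;           // no wrap since the last read
    return bufferSize - lastReadOffset + writeOffset;  // writer wrapped past the end
}

// Round down to whole sample frames so a transfer never splits a sample across
// channels (e.g. 16 channels x 4 bytes = 64 bytes per sample frame).
uint32_t WholeSampleFrames(uint32_t bytes, uint32_t numChannels, uint32_t bytesPerSample)
{
    const uint32_t frameBytes = numChannels * bytesPerSample;
    return bytes - (bytes % frameBytes);
}
```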

For playback, frames written to the device frame buffer can be set to go on-air any time before the VBI. Field-mode processing here is a little tricky, as once the first field is on-air, the second field will go on-air ready-or-not, so the application must make some real-time decisions if it needs to drop a field. Low-latency client software can be written with a delay parameter that would allow for some variation in the application’s video processing time while maintaining a fixed delay, but it may not provide the reliability of the kernel queuing model.

Some relevant API calls:

Ping-Pong Capture/Playout Demos:

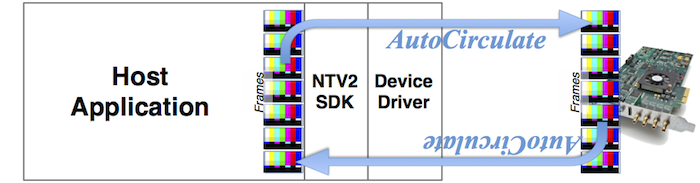

AutoCirculate is an API in the CNTV2Card class which allows real-time video streaming on a non-real-time host operating system. Video frames are buffered between the application software and the AJA device driver, and the driver is responsible for playing out (or capturing) those frames at the correct time.

AutoCirculate makes it easy to stream video & audio data into and out of your application.

AutoCirculate keeps SDI Ancillary Data such as Embedded Audio and Timecode synchronized with the AutoCirculating video frames.

The AJA device driver performs the bulk of the work involved in streaming audio and video to/from the AJA hardware. It synchronizes the audio with the video, detects dropped frames while streaming, time-stamps the frame data, reads or writes embedded timecode, and manages many other details.

The AutoCirculate API coordinates the transfer of video and audio data between the AJA driver and the host application as the driver circulates through its designated frame set (which is a contiguous block of frames in device SDRAM). For recording, the driver cycles through its associated input frames, and on playback, the driver cycles through its associated output frames. For example, an AutoCirculate capture using 7 frames would record each incoming frame one at a time into device SDRAM in frames 0…6, 0…6, 0…6 ad infinitum, continually and repeatedly reusing those frame “slots” in its memory. The key is for the host application to continually transfer the captured frames from device memory into host memory fast enough to keep up with the incoming video being recorded on the device.
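The 0…6, 0…6 cycling is modular arithmetic over the channel's frame range. A sketch with a hypothetical function name, assuming frames are counted from the first frame captured after AutoCirculate starts:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: the device frame slot that receives the Nth captured
// frame (N counted from 0) when AutoCirculate cycles through frameCount
// contiguous frames beginning at startFrame.
uint32_t SlotForFrame(uint64_t frameIndex, uint32_t startFrame, uint32_t frameCount)
{
    return startFrame + static_cast<uint32_t>(frameIndex % frameCount);
}
```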

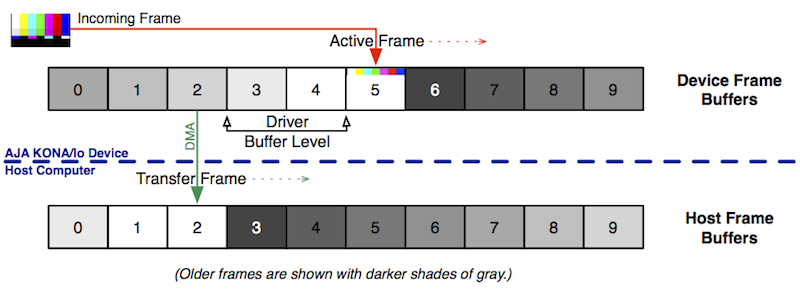

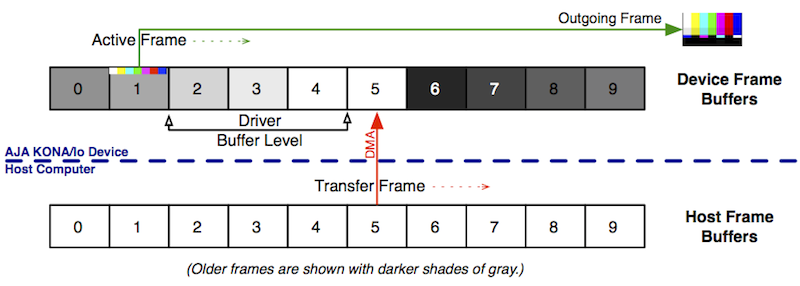

Here’s an example of how AutoCirculate works in capture mode using 10 frame buffers:

This illustrates a moment-in-time snapshot of AutoCirculate capture in action:

As each Incoming Frame streams into the AJA device, its image data is recorded into the Active Frame in device SDRAM. The frame’s audio and other ancillary data can also be recorded into device SDRAM.

While AutoCirculate is running, the client application must transfer frames on demand from device frame memory to host memory using Direct Memory Access (DMA), by calling CNTV2Card::AutoCirculateTransfer.

The Transfer Frame — i.e. the frame being transferred to the host at the host’s request — “chases” the Active Frame, with the gap between them called the Buffer Level.

To prevent “torn” or malformed images, the host application must ensure that the Transfer Frame never coincides with the Active Frame being recorded. In other words, the Buffer Level must never reach zero.

Assuming a 29.97 fps full-frame rate, the AJA driver will bump the Active Frame to the next frame slot every 33 milliseconds. As long as the host application that’s transferring frames takes less than 33 milliseconds (including the DMA transfer time) to consume each frame, all will be well. If the host takes longer than 33 milliseconds to consume a frame, the Transfer Frame will start to fall behind, and the Buffer Level will increase. If this is allowed to continue, the Active Frame will overtake the Transfer Frame, which will result in “dropped” frames that never get transferred to the host. The dropped frame count is available from the AUTOCIRCULATE_STATUS::GetDroppedFrameCount function. (See AUTOCIRCULATE_STATUS and CNTV2Card::AutoCirculateGetStatus for more information.)
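The 33-millisecond figure is the frame period at 30000/1001 fps (about 33.37 ms). A small sketch of that arithmetic and of the budget check; both function names are illustrative, not SDK calls.

```cpp
#include <cassert>

// Illustrative helper (not an SDK function): the duration of one frame, in
// milliseconds, for a frame rate given as a ratio, e.g. 30000/1001 for "29.97".
double FrameDurationMs(unsigned rateNumerator, unsigned rateDenominator)
{
    return 1000.0 * rateDenominator / rateNumerator;
}

// True if the host's per-frame work (processing plus DMA) fits in one frame time.
bool WithinTimeBudget(double hostWorkMs, unsigned rateNumerator, unsigned rateDenominator)
{
    return hostWorkMs < FrameDurationMs(rateNumerator, rateDenominator);
}
```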

If the AutoCirculate channel was configured to capture audio, the driver will continuously monitor the incoming Audio/Video sync. If it detects a difference of more than about 10 milliseconds, it will attempt to resync the audio to the video. This resynchronization will automatically restart the audio engine, which usually results in a click or pop in the captured audio.
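The 10-millisecond rule can be made concrete by comparing how much time the captured audio and the captured video each represent. This is only an illustrative model: the driver's actual sync measurement is internal to it, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Illustrative model only. Offset, in milliseconds, between the audio captured
// so far and the video captured so far; positive means audio is ahead of video.
double AVOffsetMs(uint64_t audioSamples, uint32_t sampleRate,
                  uint64_t videoFrames, uint32_t rateNumerator, uint32_t rateDenominator)
{
    const double audioMs = 1000.0 * static_cast<double>(audioSamples) / sampleRate;
    const double videoMs = 1000.0 * static_cast<double>(videoFrames) * rateDenominator / rateNumerator;
    return audioMs - videoMs;
}

// Mirrors the "more than about 10 milliseconds" threshold described above.
bool WouldResync(double offsetMs, double thresholdMs = 10.0)
{
    return std::fabs(offsetMs) > thresholdMs;
}
```

For example, 30 frames at 30000/1001 fps span 1001 ms, which matches 48048 samples at 48 kHz exactly.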

(See Timecode for more information about timecode capture and AutoCirculate.)

To prevent dropping frames, the application must stay within its per-frame “time budget”.

If a frame is ready to transfer (true), call CNTV2Card::AutoCirculateTransfer right away. If not (false), call CNTV2Card::WaitForInputVerticalInterrupt to wait for a frame to become available.

The more device frames dedicated to an AutoCirculate channel (specified by inFrameCount or inStartFrameNumber/inEndFrameNumber in CNTV2Card::AutoCirculateInitForInput or CNTV2Card::AutoCirculateInitForOutput), the longer the client application can be held off from transferring the next frame from the device. This increases latency, but also decreases the likelihood of frame drops.

The capture processing loop should be coded to transfer a frame whenever a frame is ready to be transferred, and should wait a frame if not. The capture Demonstration Applications provide good examples of how to do this, for example (generally):
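A minimal sketch of such a loop, written against a hypothetical device interface rather than the demonstration applications' actual code. The Device methods named below are assumptions that stand in for the SDK calls listed in the comment.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical device abstraction, NOT the NTV2 API. In a real application these
// roles are played by CNTV2Card::AutoCirculateGetStatus (running? frame ready?),
// CNTV2Card::AutoCirculateTransfer and CNTV2Card::WaitForInputVerticalInterrupt.
// Returns the number of frames transferred before the quit flag was set.
template <typename Device>
unsigned RunCaptureLoop(Device& device, const std::atomic<bool>& quit)
{
    unsigned transferred = 0;
    while (!quit.load())
    {
        if (device.IsRunning() && device.FrameAvailable())
        {
            device.TransferFrame();  // DMA the oldest captured frame to host memory
            ++transferred;           // process it here, within the per-frame time budget
        }
        else
        {
            device.WaitForVBI();     // nothing ready: sleep until the next input VBI
        }
    }
    return transferred;
}
```

Keeping the transfer-or-wait decision in one place means the loop never busy-spins: it either has a frame to transfer or sleeps until the next VBI.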

The number of buffers waiting to be transferred from the board is available from AUTOCIRCULATE_TRANSFER_STATUS::acBufferLevel.

If the application is held off for longer than the number of frames allocated, one or more captured frames will be lost (dropped).
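The relationship between frame count, hold-off time and drops can be explored with a simplified model of the capture ring. CaptureRingModel is hypothetical and only counts drops; it does not try to reproduce which frame the driver actually discards.

```cpp
#include <cassert>

// Simplified model of an AutoCirculate capture channel (not driver code).
class CaptureRingModel
{
public:
    explicit CaptureRingModel(unsigned frameCount) : mFrameCount(frameCount) {}

    // Input VBI: another frame has been completely recorded.
    void OnVBI()
    {
        // One slot is always the Active Frame being recorded, so at most
        // frameCount - 1 completed frames can wait for the host.
        if (mBufferLevel < mFrameCount - 1)
            ++mBufferLevel;
        else
            ++mDropped;  // no free slot: a captured frame is lost
    }

    // Host transfer: consumes the oldest waiting frame, if any.
    bool Transfer()
    {
        if (mBufferLevel == 0)
            return false;  // nothing ready; the host should wait for the next VBI
        --mBufferLevel;
        return true;
    }

    unsigned BufferLevel() const { return mBufferLevel; }
    unsigned Dropped() const { return mDropped; }

private:
    unsigned mFrameCount;
    unsigned mBufferLevel = 0;
    unsigned mDropped = 0;
};
```

With 4 frames, a host held off for 5 VBIs finds 3 frames waiting and 2 drops.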

AutoCirculate capture demos:

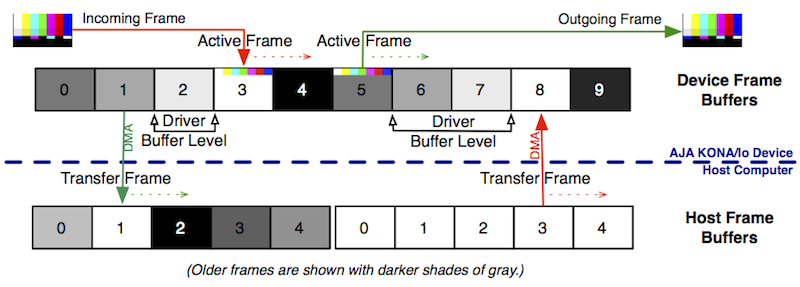

Here, AutoCirculate is in playout mode, using 10 frame buffers. Frames 6, 7, 8, 9 and 0 have already played through the output jack (in that order), frame 1 is currently being played, and frame 5 is being transferred from host memory to the AJA device.

In playout, the AJA device firmware continually streams the video content of the Active Frame buffer to the output jack.

When AutoCirculate is running, frames get transferred (DMA’d) from host memory to the Transfer Frame of the AJA device’s frame memory.

The Active Frame “chases” the Transfer Frame, with the frame gap between them called the Buffer Level.

To prevent repeated images, the host application must ensure that the Transfer Frame never collides with the Active Frame being played.

Assuming a 29.97 fps full-frame rate, the AJA driver will bump the Active Frame to the next frame slot every 33 milliseconds. As long as the host application that’s transferring frames takes less than 33 milliseconds (including the DMA transfer time) to produce each frame, all will be well. If the host takes longer than 33 milliseconds to produce a frame, the Transfer Frame will start to fall behind, and the Buffer Level will decrease. If this is allowed to continue, the Active Frame will overtake the Transfer Frame, which will result in “dropped” frames that will end up being played more than once.
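Playout mirrors the capture model: the host fills slots, each output VBI consumes one, and a starved channel repeats its Active Frame. Again, PlayoutRingModel is a simplified, hypothetical model, not driver code.

```cpp
#include <cassert>

// Simplified model of an AutoCirculate playout channel (not driver code).
class PlayoutRingModel
{
public:
    explicit PlayoutRingModel(unsigned frameCount) : mFrameCount(frameCount) {}

    // Host transfer: queue one more frame. Fails if every slot other than the
    // Active Frame already holds an unplayed frame (the host must wait).
    bool Transfer()
    {
        if (mBufferLevel >= mFrameCount - 1)
            return false;
        ++mBufferLevel;
        return true;
    }

    // Output VBI: advance to the next queued frame, or repeat the current one.
    void OnVBI()
    {
        if (mBufferLevel > 0)
            --mBufferLevel;
        else
            ++mRepeated;  // starved: the Active Frame is played again
    }

    unsigned BufferLevel() const { return mBufferLevel; }
    unsigned Repeated() const { return mRepeated; }

private:
    unsigned mFrameCount;
    unsigned mBufferLevel = 0;
    unsigned mRepeated = 0;
};
```

Calling Transfer a few times before the first OnVBI corresponds to preloading frames before starting playout.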

The Per-Frame “Time Budget” discussed in the AutoCirculate Capture section applies equally for playout.

AutoCirculate assumes that the video and audio being transferred via CNTV2Card::AutoCirculateTransfer should be played together in-sync. It continuously monitors the sync, and if it detects a difference of more than about 10 milliseconds, it will resync the audio to the video. This resync involves restarting the audio engine, so a click or pop may be heard when it happens. The resync usually only happens when frames are dropped, during which the audio will be disabled until newly-transferred data arrives. (See Audio Playout for more information.)

During a very long playback (occurring over many hours), if too many or too few samples are transferred, the sync may drift, which may require a correction. A classic example of this would be playing a sequence of MP4 videos that have no notion of, say, the NTSC 5-frame cadence sequence. It can also easily happen when loop-playing a very short clip. One way to handle this is to track the number of video frames and audio samples delivered to AutoCirculate over the long term, then add or drop samples in the breaks between clips. Remember to reset the tracked frame and sample counts whenever the AUTOCIRCULATE_STATUS::GetDroppedFrameCount or AUTOCIRCULATE_TRANSFER_STATUS::acFramesDropped value changes (increases). See Correlating Audio Samples to Video Frames for more information.
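Long-term tracking amounts to comparing the samples actually delivered with the exact number the frame count implies. A sketch assuming a known sample rate (48 kHz in the example) and a frame rate expressed as a ratio; the class and function names are hypothetical, and how the correction is applied between clips is left to the application.

```cpp
#include <cassert>
#include <cstdint>

// Expected cumulative audio samples after `frames` video frames, computed with
// exact integer arithmetic (rounded down). At 48 kHz and 30000/1001 fps this
// averages 1601.6 samples per frame, i.e. 8008 samples every 5 frames.
uint64_t ExpectedSamples(uint64_t frames, uint64_t sampleRate,
                         uint64_t rateNumerator, uint64_t rateDenominator)
{
    return frames * sampleRate * rateDenominator / rateNumerator;
}

// Hypothetical tracker for what the application has handed to AutoCirculate.
class AVDeliveryTracker
{
public:
    AVDeliveryTracker(uint64_t sampleRate, uint64_t rateNumerator, uint64_t rateDenominator)
        : mSampleRate(sampleRate), mRateNum(rateNumerator), mRateDen(rateDenominator) {}

    void Delivered(uint64_t frames, uint64_t samples)
    {
        mFrames += frames;
        mSamples += samples;
    }

    // Positive: samples to add at the next break between clips; negative: samples to drop.
    int64_t Correction() const
    {
        return static_cast<int64_t>(ExpectedSamples(mFrames, mSampleRate, mRateNum, mRateDen))
             - static_cast<int64_t>(mSamples);
    }

    // Call whenever the dropped-frame count increases.
    void Reset() { mFrames = 0; mSamples = 0; }

private:
    uint64_t mSampleRate, mRateNum, mRateDen;
    uint64_t mFrames = 0, mSamples = 0;
};
```

A source that always supplies 1600 samples per frame at 29.97 fps falls 8 samples short every 5 frames, which is exactly the kind of slow drift described above.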

(See Timecode for more information about timecode playout and AutoCirculate.)

AutoCirculate playout demos:

Here, AutoCirculate is simultaneously capturing from a device input and playing through a device output, while still using 10 frame buffers in all.

For this to work, two independent “channels” must be used, each with its own reserved range of frame buffers on the device, and each with its own Active Frame, Transfer Frame and Buffer Level measuring the gap (if any) between them.
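Whether two channels' reserved ranges interfere reduces to an interval-overlap test. For example, giving capture frames 0-4 and playout frames 5-9 (an arbitrary split of the 10 frames) keeps them disjoint. FrameRange and RangesOverlap are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical description of the contiguous device frames reserved for one channel.
struct FrameRange
{
    uint32_t start;  // first frame number
    uint32_t end;    // last frame number (inclusive)
};

// True if two inclusive frame ranges share at least one frame, i.e. the two
// channels would read or write the same device memory.
bool RangesOverlap(const FrameRange& a, const FrameRange& b)
{
    return a.start <= b.end && b.start <= a.end;
}
```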

The client application would typically use two execution threads for performing the DMA transfers — one for capture, the other for playout.

AutoCirculate Capture/Playout Demos:

There are five principal member functions that comprise the AutoCirculate API in the CNTV2Card class:

In addition, there are several special-purpose methods that are available for more specialized applications, including:

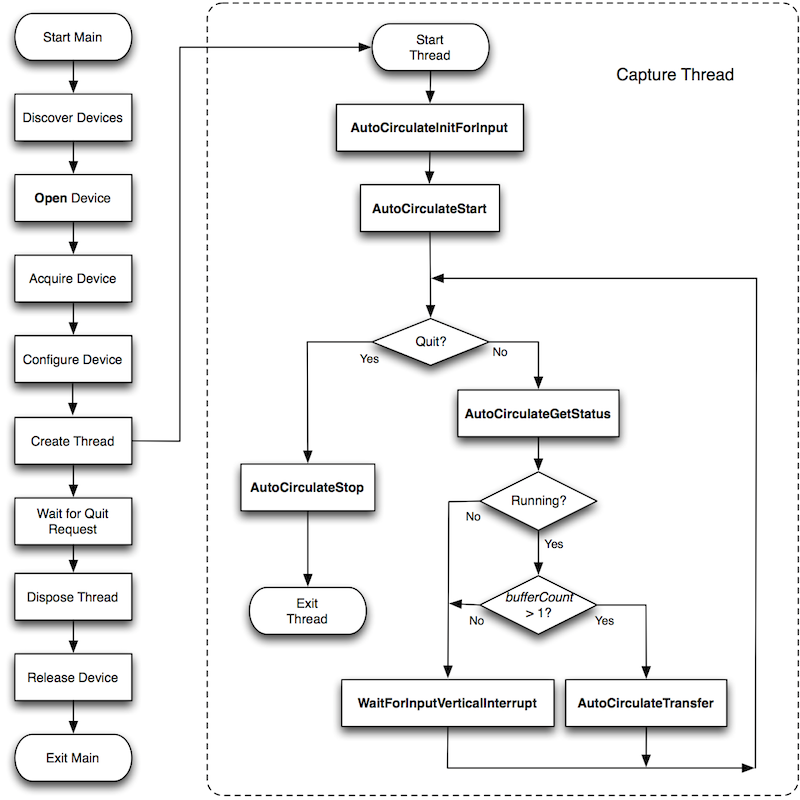

This illustrates the recommended program flow for using AutoCirculate to capture video and audio. For a GUI application, the “Main” flow of execution on the left would handle the user interface implementation. The “Wait for Quit Request” step would respond to user inputs and display capture/playout status. For a command-line utility, the “Wait for Quit Request” box would implement a loop that sleeps, waiting for a global “quit” flag to become logically “true” (and perhaps periodically emit some status information to the standard output stream).

Once the device has been acquired and configured, the main thread then creates and starts the capture thread.

The program flow surrounded by the dashed line is the capture thread. When it starts, it calls CNTV2Card::AutoCirculateInitForInput to set up the AutoCirculate parameters for a specific NTV2Channel, which allocates a contiguous block of frame buffers on the card for the AutoCirculate mechanism. AutoCirculate is then started by calling CNTV2Card::AutoCirculateStart. Once started, the thread remains in a loop, calling CNTV2Card::AutoCirculateGetStatus to determine whether AutoCirculate is in the “running” state and whether at least one frame is ready to transfer. If so, it calls CNTV2Card::AutoCirculateTransfer to transfer the captured frame to host memory; otherwise it sleeps in CNTV2Card::WaitForInputVerticalInterrupt until the next VBI occurs. This continues until a global quit flag is set, at which point the thread calls CNTV2Card::AutoCirculateStop and exits.

After CNTV2Card::AutoCirculateStart is called, the AJA device’s Active Frame will automatically change at every VBI to cycle continuously from the starting frame to the ending frame and back again until CNTV2Card::AutoCirculateStop is called. CNTV2Card::AutoCirculateTransfer is called repeatedly to transfer a buffered video frame (plus any associated audio, timecode, SDI Ancillary Data, etc.) between the driver and the application. The Active Frame, buffer level, etc. are advanced automatically by the AJA device driver.

CNTV2Card::AutoCirculateGetStatus is used to determine whether a given channel is currently AutoCirculating, and if so, to report its current buffer level. It also supplies other data, such as its frame range (starting and ending frames) and its Active Frame.

Because AutoCirculate is wholly implemented in the driver, and is completely independent of any CNTV2Card instance that started it or that could be monitoring or controlling it, there is no provision to automatically stop AutoCirculating when any/all CNTV2Card instances have been destroyed. The driver will continue to AutoCirculate, blissfully unaware that nobody is monitoring the activity. OEMs are encouraged to be good citizens and call CNTV2Card::AutoCirculateStop at exit to reduce unnecessary driver activity.
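One way to make that clean-up hard to forget is a scope guard that runs a stop action on every exit path, including early returns and exceptions. ScopedStop is a hypothetical helper; in an NTV2 application its stop action would call CNTV2Card::AutoCirculateStop for the channel (an assumption about how you wire it up). A guard cannot help if the process is killed outright, which is exactly the case in which the driver keeps circulating.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Hypothetical RAII guard: runs a stop action when it goes out of scope, so a
// channel is stopped even if the capture or playout thread exits early or throws.
class ScopedStop
{
public:
    explicit ScopedStop(std::function<void()> stop) : mStop(std::move(stop)) {}
    ~ScopedStop() { if (mStop) mStop(); }
    ScopedStop(const ScopedStop&) = delete;
    ScopedStop& operator=(const ScopedStop&) = delete;

private:
    std::function<void()> mStop;
};
```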

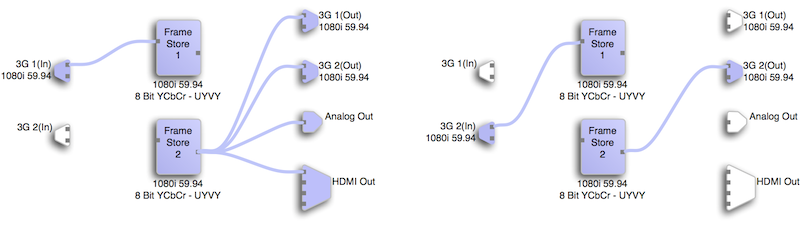

The NTV2Channel passed to CNTV2Card::AutoCirculateInitForInput (or CNTV2Card::AutoCirculateInitForOutput) identifies the device FrameStore Operation that is to be configured and operated by AutoCirculate in the device driver (i.e. NTV2_CHANNEL1 == 0 == FrameStore 1 == NTV2_WgtFrameBuffer1, NTV2_CHANNEL2 == 1 == FrameStore 2 == NTV2_WgtFrameBuffer2, etc.).

AutoCirculate is driven by vertical blanking interrupts (VBIs) received by the channel’s FrameStore Operation. If these interrupts aren’t received by the hardware, the driver’s ISR won’t get called, and AutoCirculate (for that channel) will be stuck.

To obtain the size of your host-based video buffer, in bytes (to accommodate a single frame), try…
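The SDK call that belongs here is not reproduced. As a cross-check, the size of a single frame can be estimated from the raster width, height and pixel layout. The byte layouts below are assumptions for three common formats (8-bit 4:2:2 YCbCr at 2 bytes per pixel, 10-bit v210 YCbCr packing 48 pixels into each 128-byte row segment, and 8-bit RGBA at 4 bytes per pixel); the SDK's own helpers should be preferred, since they also account for details such as extra VANC lines.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative pixel layouts (assumptions, not NTV2 frame-buffer format names).
enum class PixelLayout { YCbCr8_422, YCbCr10_v210, RGBA8 };

uint32_t BytesPerRow(PixelLayout layout, uint32_t width)
{
    switch (layout)
    {
        case PixelLayout::YCbCr8_422:   return width * 2;                // 2 bytes per pixel
        case PixelLayout::YCbCr10_v210: return (width + 47) / 48 * 128;  // rows padded to 48-pixel/128-byte units
        case PixelLayout::RGBA8:        return width * 4;                // 4 bytes per pixel
    }
    return 0;
}

// Bytes needed for one full frame of active video (no VANC lines).
uint32_t FrameBytes(PixelLayout layout, uint32_t width, uint32_t height)
{
    return BytesPerRow(layout, width) * height;
}
```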

To determine the maximum number of frame buffers a given device can accommodate for a given frame geometry and buffer format, call NTV2DeviceGetNumberFrameBuffers.

When AutoCirculate starts in playout mode (without yet transferring frames), the device firmware immediately starts playing the contents of the Active Frame to the output jack, displaying whatever YCbCr/RGB data was in the device’s frame buffer memory.

If CNTV2Card::AutoCirculateTransfer has not been called, the Active Frame and Transfer Frame remain stuck at frame zero, the Buffer Level remains at zero, and the Frames Dropped tally increments at the current frame rate.

To prevent displaying unpredictable frame(s) at playout startup (and to prevent frame drops caused by this initial frame starvation), it’s best to “preload” one or more frames before calling CNTV2Card::AutoCirculateStart.

To do this, call CNTV2Card::AutoCirculateTransfer for each frame you wish to preload into the device frame buffer. After each such call, the Transfer Frame will “bump” to the next frame slot, and the Buffer Level will increment. After preloading, you can safely call CNTV2Card::AutoCirculateStart, and continue to transfer additional frames to the device, with the goal of keeping the Transfer Frame ahead of the Active Frame being played by the hardware.

In capture mode, with a progressive video signal, immediately after the input VBI, the last-written video frame in the device’s frame buffer is guaranteed to be fully-composed. With interlaced video, this is only true after every other VBI. The following code snippet allows this phenomenon to be visualized:

With interlaced video, the standard output stream will contain a ...010101010101... pattern, whereas progressive video results in a ...111111111111... pattern.
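The SDK snippet referred to above is not reproduced here. As a rough stand-in, the same pattern can be generated from a simple model that marks, for each input VBI, whether a complete frame is available. The model assumes counting starts on a first-field VBI; CompletionPattern is a hypothetical name.

```cpp
#include <cassert>
#include <string>

// Simplified model: for each input VBI, emit '1' if the last-written frame is
// fully composed, else '0'. Progressive video completes a frame at every VBI;
// interlaced video completes one only at every other VBI (after its second field).
std::string CompletionPattern(bool interlaced, unsigned vbiCount)
{
    std::string pattern;
    for (unsigned vbi = 0; vbi < vbiCount; ++vbi)
    {
        const bool complete = !interlaced || (vbi % 2 == 1);
        pattern += complete ? '1' : '0';
    }
    return pattern;
}
```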

When AutoCirculating interlaced video, the driver will not “bump” the Active Frame until it has been completely composed (i.e., until immediately after the “Field 1” VBI occurs).

It is possible (and sometimes even desirable) to transfer each field of interlaced video separately, which can be done by calling CNTV2Card::AutoCirculateTransfer every time through the loop (without checking the Buffer Level). See the NTV2FieldBurn demonstration application for more details.

Historically, AutoCirculate was always frame-based, but many customers requested the ability to operate in Field Mode. Field Mode was added in SDK 15.2.

To operate in Field Mode, specify the AUTOCIRCULATE_WITH_FIELDS option when calling CNTV2Card::AutoCirculateInitForInput or CNTV2Card::AutoCirculateInitForOutput.

AutoCirculate’s kernel queuing model doesn’t support low latency very well. It’s very good at reliably capturing and playing frames without dropping any, as long as the frame queue is large enough to absorb user-mode latency. The kernel queue is managed once per frame, just after the video VBI (or once per field, if using Field-Based Operation). This gives the client software an entire frame-time to program the hardware for the next frame (or, in field mode, an entire field-time to program the hardware for the next field).

For capture, the frame written to the device frame buffer during the previous frame time is available for transfer after the vertical interrupt is received. However, audio can’t be transferred immediately: audio is captured continuously, and not all of the audio for the previous frame may have been flushed to the device frame buffer yet. For this reason, AJA usually recommends transferring video and audio only when there are at least two frames in the queue, which may be unacceptable for low-latency applications.

For playback, when the frame interrupt is received, the frame to display after the next VBI must be set in the hardware. Since this is a whole frame early, you essentially end up with two frames in the queue for playback as well. This is why we wrote the NTV2LLBurn Demo, a low-latency demo that works without AutoCirculate.

How many frames to circulate? This choice can depend on a number of factors that each affect how many frames are available for buffering on the device:

… AutoCirculate_39 message group is enabled). For example:

AutoCirculate channel 3: 85 frames (10 thru 94) exceeds 40-frame max audio buffer capacity

There are times when it’s necessary to operate additional FrameStore(s)/Channel(s) from a primary (master) FrameStore/Channel. For example, to stream YUV Video and Key to two separate SDI outputs, with each YUV signal coming from its own video stream on the host computer, the Key channel/stream must be circulated coincident with the Video (fill) channel’s VBI.

This “ganging” of channels is accomplished by specifying an inNumChannels value that’s greater than 1 (the default) in the call to CNTV2Card::AutoCirculateInitForInput or CNTV2Card::AutoCirculateInitForOutput.

When operating in this fashion, one CNTV2Card::AutoCirculateTransfer call must be made for each ganged channel in the application’s frame-processing loop. For example, to operate playback channels 3 and 4 from channel 2 (the master):
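The original example code for channels 2, 3 and 4 isn't reproduced here. The sketch below only illustrates the bookkeeping: which channels a master drives, and one transfer per ganged channel per frame. It assumes the ganged channels are the consecutive ones following the master (as in the 2, 3, 4 example) and that the 1-8 limit from the AutoCirculate log messages applies; GangedChannels and TransferGangedFrame are hypothetical, and the transfer callback stands in for CNTV2Card::AutoCirculateTransfer.

```cpp
#include <cassert>
#include <stdexcept>
#include <vector>

// Hypothetical helper: zero-based indices (NTV2_CHANNEL1 == 0) of the channels
// operated by a master channel, assuming they are consecutive, e.g. master
// index 1 (channel 2) with 3 channels covers channels 2, 3 and 4.
std::vector<unsigned> GangedChannels(unsigned masterIndex, unsigned numChannels)
{
    const unsigned kMaxChannels = 8;
    if (numChannels < 1 || numChannels > kMaxChannels)
        throw std::invalid_argument("numChannels must be 1-8");
    if (masterIndex + numChannels > kMaxChannels)
        throw std::out_of_range("ganged channels extend past channel 8");
    std::vector<unsigned> channels;
    for (unsigned i = 0; i < numChannels; ++i)
        channels.push_back(masterIndex + i);
    return channels;
}

// One transfer per ganged channel, per frame; `transfer` stands in for the
// per-channel CNTV2Card::AutoCirculateTransfer call.
template <typename TransferFn>
void TransferGangedFrame(const std::vector<unsigned>& channels, TransferFn transfer)
{
    for (unsigned ch : channels)
        transfer(ch);
}
```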

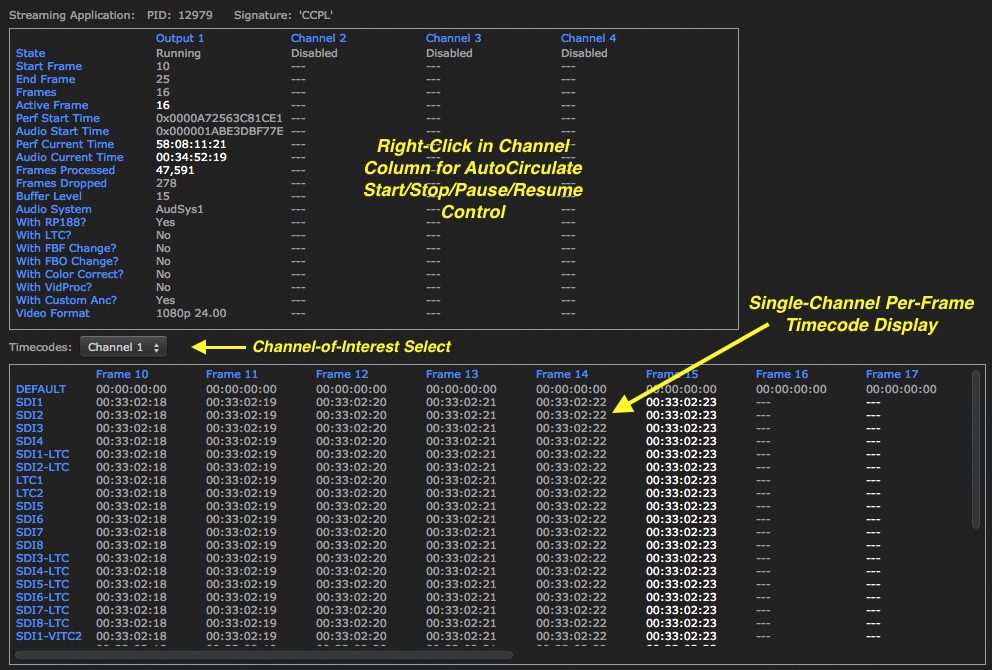

“NTV2Watcher” has an AutoCirculate Inspector that shows AutoCirculate activity in near-real-time for all possible channels on any AJA device attached to the host.

You can right-click in the AutoCirculate Channel column to pop up a menu to control AutoCirculate activity on that channel. This is handy for manually pausing, resuming, stopping or restarting AutoCirculate while your application runs.

While AutoCirculate is paused, you can inspect, in great detail…

While developing and debugging with AutoCirculate, it can be helpful to monitor its diagnostic messages, particularly when the channel is initialized (see CNTV2Card::AutoCirculateInitForInput or CNTV2Card::AutoCirculateInitForOutput). These messages can be seen in the “AJA Logger” application or the ‘logreader’ Command-Line Utility when the AutoCirculate_39 message group is enabled and the client application has called AJADebug::Open (usually in main()).

In each of the following messages, {channel} identifies the direction (Input or Output) and the channel (Ch1 … Ch8).

{channel} initialized using frames {range}
Example: Input Ch3 initialized using frames 0-6

{channel}: FrameCount {count} ignored – using start/end {range} frame numbers
Example: Output Ch2: FrameCount 20 ignored – using start/end 12/45 frame numbers

{channel}: MultiLink Audio requested, but device doesn't support it
Example: Input Ch1: MultiLink Audio requested, but device doesn't support it
Cause: One of the AUTOCIRCULATE_WITH_MULTILINK_AUDIO… options was used, but the AJA device doesn’t support Multi-Link Audio (32, 48, 64 Audio Channels).
Remedy: … AUTOCIRCULATE_WITH_MULTILINK_AUDIO option.

{channel}: memory overlap/interference: Frms {range}: {activities}
{activities}: AC indicates an active AutoCirculate Channel; Ch indicates an enabled FrameStore; Aud indicates a running NTV2AudioSystem; Read indicates the memory is being read; Write indicates the memory is being written.
Example: Input Ch3: memory overlap/interference: Frms 5-6: AC3 Write, AC2 Read

{channel}: {count} frames ({range}) exceeds {maxCount}-frame max buffer capacity of audioSystem
audioSystem: AudSys1 … AudSys8.
Example: Input Ch3: 51 frames (0-50) exceeds 41-frame max audio buffer capacity

{channel} has AUTOCIRCULATE_WITH_ANC set, but also has NTV2_VANCMODE_TALL set – this may cause anc insertion problems
Example: Output Ch4 has AUTOCIRCULATE_WITH_ANC set, but also has NTV2_VANCMODE_TALL set – this may cause anc insertion problems
Remedy: … AUTOCIRCULATE_WITH_ANC option.

{channel}: AUTOCIRCULATE_WITH_RP188 requested without AUTOCIRCULATE_WITH_ANC – enabled AUTOCIRCULATE_WITH_ANC anyway
Example: Output Ch2: AUTOCIRCULATE_WITH_RP188 requested without AUTOCIRCULATE_WITH_ANC – enabled AUTOCIRCULATE_WITH_ANC anyway
Cause: … AUTOCIRCULATE_WITH_RP188 option, but without the AUTOCIRCULATE_WITH_ANC option. Without the SMPTE ST-2110 ancillary data stream, RP188 timecode won’t be transmitted.
Remedy: … AUTOCIRCULATE_WITH_ANC and AUTOCIRCULATE_WITH_RP188.

… is illegal channel value
Example: Ch9 is illegal channel value

{channel}: illegal 'inNumChannels' value '{count}' – must be 1-8
Example: Output Ch2: illegal 'inNumChannels' value '9' – must be 1-8

{channel}: FBAllocLock mutex not ready
Example: Output Ch2: FBAllocLock mutex not ready
Cause: … main() was called).

{channel}: Zero frames requested
Example: Output Ch2: Zero frames requested

{channel}: EndFrame ({endFrame}) precedes StartFrame ({startFrame})
Example: Input Ch3: EndFrame(0) precedes StartFrame(6)

{channel}: Frames {range} < 2 frames
Example: Input Ch3: Frames 1-1 < 2 frames

{channel} initialization failed
Example: Input Ch3 initialization failed

Starting in SDK 17.0, a new API and KONA XM™ firmware were introduced to support asynchronous DMA streaming.

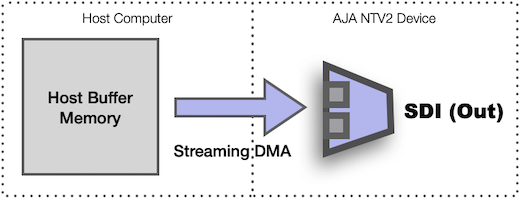

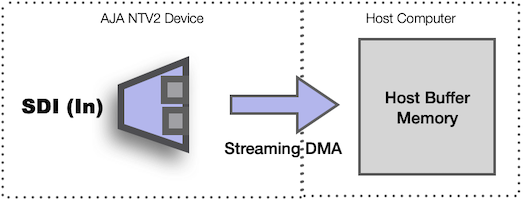

Unlike traditional block-mode DMA that transfers full-frame video between device SDRAM and host memory at full PCIe speeds, streaming DMA transfers video “slices” — small bands, or portions, of the video raster — directly to/from host memory at the video pixel rate.
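Why slices give sub-frame latency can be estimated with simple arithmetic: a slice of N lines is ready after roughly N/totalLines of a frame period, rather than after a whole frame. This is a rough model that ignores DMA and software overhead; the 64-line slice is an arbitrary example, 1125 is the total line count of 1080-line HD rasters, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Rough model: time to scan `linesPerSlice` lines when a raster of `totalLines`
// lines (active plus blanking) is scanned once per frame period.
double SliceDurationMs(unsigned linesPerSlice, unsigned totalLines,
                       unsigned rateNumerator, unsigned rateDenominator)
{
    const double frameMs = 1000.0 * rateDenominator / rateNumerator;
    return frameMs * linesPerSlice / totalLines;
}
```

At 30000/1001 fps, a 64-line slice of a 1125-line raster takes about 1.9 ms, versus about 33.4 ms for a whole frame.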

The new API functions are:

Streaming DMA is ideal for use in applications that require very low (sub-frame) latency.

Unlike block-mode DMA transfers, streaming mode captures directly into host memory:

Unlike block-mode DMA transfers, streaming mode plays directly from host memory: